Daniel Koretz is a professor who teaches educational measurement at the Harvard Graduate School of Education. He is the author of Measuring Up: What Educational Testing Really Tells Us. Below, he weighs in on the NYC Progress Reports that were released yesterday.

eduwonkette: One of the key points of your book is that test scores alone are insufficient to evaluate a teacher, a school, or an educational program. Yesterday, the New York City Department of Education released its Progress Reports, which grade each school on an A-F scale. Sixty percent of the grade is based on year-to-year growth and 25 percent on proficiency, so 85 percent of the grade is based on test scores. Do you have any advice for New Yorkers about how to use, or not to use, this information to make sense of how their schools are doing?

Koretz: This is a more complicated question in New York City than in many places because of the complexity of the Progress Reports. So let’s break it into two parts: first, what should people make of scores, including the scores New York released a few weeks ago; and second, what additional issues should New Yorkers keep in mind when interpreting the Progress Reports?

In the ideal world, where tests are used appropriately, I give parents and others the same warning that people in the testing field have been offering (to little avail) for more than half a century: test scores give you a valuable but limited picture of how kids in a school perform. There are many important aspects of schooling that we do not measure with achievement tests, and even for the domains we do measure—say, mathematics—we test only part of what matters. And test scores only describe performance; they don’t explain it. Decades of research has repeatedly confirmed that many factors other than school quality, such as parental education, affect achievement and test scores. Therefore, schools can be either considerably better or considerably worse than their scores, taken alone, would suggest.

However, there is another complication: when educators are under intense pressure to raise scores, high scores and big increases in scores become suspect. Scores can become seriously inflated; that is, they can increase substantially more than actual student learning. This remains controversial in the education policy world, but it should not be, because the evidence is clear, and similar corruption of accountability measures has been found in a wide variety of economic and policy areas (so widely that it goes by the name of “Campbell’s Law”). High scores or big gains can indicate either good news or inflation, and in the absence of other data, it is often not possible to distinguish one from the other. As you know, this was a big issue in New York City this year, in part because some of the gains, such as the increase in the proportion of 8th graders scoring at Levels 3 and 4 in math, were remarkably large.

New York City is a special case. It is always necessary to reduce the array of data from a test to a smaller set of indicators, and NYC has developed its own, called the Progress Reports, which assign schools one of five grades, A through F. My advice to New Yorkers is to pay attention to the information that goes into creating the Progress Reports but to ignore the letter grades and to push for improvements to the evaluation system.

The method for creating Progress Reports is baroque, and it is hard to pick which issues to highlight in a short space. The biggest problems, in my opinion, lie in the estimation of student progress, which constitutes 60% of the grade. The basic idea is that a student’s performance on this year’s test is compared to her performance in the previous grade, and the school gets credit for the change. It sounds simple and logical, but the devil is in the details. (For a non-technical overview of the issues in using value-added models to evaluate teachers and schools, see “A Measured Approach”.)
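To make the “compare this year to last year” idea concrete, here is a minimal gain-score sketch. It is not NYC’s formula, which has many more steps: the record layout, the function name `school_growth_credit`, and the ratings are all hypothetical, and the sketch simply assumes that both years’ ratings sit on one common scale, which is exactly the assumption Koretz questions in the problems that follow.

```python
from statistics import mean

# Hypothetical matched records: (student_id, last_year_rating, this_year_rating).
# ASSUMPTION: both ratings sit on one common scale, so subtracting them is meaningful.
def school_growth_credit(records):
    """Average year-to-year change in rating across a school's matched students.

    A change of 0 means the student kept the same rating, which the system
    described in the text counts as a year's worth of progress.
    """
    changes = [this_year - last_year for _, last_year, this_year in records]
    return mean(changes)

records = [
    ("s1", 2.0, 2.5),  # gained half a level
    ("s2", 3.0, 3.0),  # held steady
    ("s3", 1.5, 2.5),  # gained a full level
]
print(school_growth_credit(records))  # 0.5
```

The arithmetic is trivial; everything interesting is hidden in whether a difference of 0.5 means the same thing for every student and in every grade.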

To keep this reasonably brief, I’ll focus on three problems. First, the tests are not appropriate for this purpose. skoolboy made reference to part of this problem in a posting on your blog. To be used this way, tests in adjacent grades should be constructed in specific ways, and the results have to be placed on a single scale (a process called vertical linking). Otherwise, one has no way of knowing whether, for example, a student who gets the same score in grades 4 and 5 improved, lost ground, or treaded water. The tests used in New York were not constructed for this purpose, and the scale that NYC has layered on top of the system for this purpose is not up to the task.
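A toy illustration of why vertical linking matters, assuming a simple linear link (slope, intercept) from each grade’s reported scale to a common vertical scale. The function names and constants are hypothetical, invented for illustration. The point is only that, without a real link, the same reported score of 650 in grades 4 and 5 is consistent with improvement, no change, or decline.

```python
# Hypothetical linear links (slope, intercept) from a grade's reported scale to a
# common vertical scale. All constants are invented for illustration.
def to_vertical(score, link):
    slope, intercept = link
    return slope * score + intercept

def implied_growth(score_g4, score_g5, link_g4, link_g5):
    """Growth from grade 4 to grade 5, measured on the (assumed) vertical scale."""
    return to_vertical(score_g5, link_g5) - to_vertical(score_g4, link_g4)

same_score = 650            # identical reported score in grades 4 and 5
grade4_link = (1.0, 0.0)
possible_grade5_links = {   # without real linking data, nothing picks one of these
    "improved": (1.0, 20.0),
    "treaded water": (1.0, 0.0),
    "lost ground": (1.0, -20.0),
}
for label, link in possible_grade5_links.items():
    print(label, implied_growth(same_score, same_score, grade4_link, link))
```

Each candidate link is internally consistent; only tests designed and linked across grades can say which one, if any, is right.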

And that points to the second problem, which skoolboy also noted: the entire system hinges on the assumption that one unit of progress by student A means the same amount of improvement in learning as one unit by student B. This is technically called an interval scale, meaning that a given interval or difference means the same thing at any level. Temperature is an interval scale: the change from 40 to 50 degrees signifies the same increase in energy as the change from 150 to 160. There is no reason to believe that the scale used in the Progress Reports is even a reasonable approximation to an interval scale. It starts with the performance standards, which are themselves arbitrary divisions and cannot be assumed to be equal distances apart. The NYC system assigns to these standards new scores that nonetheless assume that the standards are equidistant; for example, a school gets the same credit for moving a student from Level 1 to Level 2 as for moving a student from Level 2 to Level 3. Moreover, the NYC system assumes that a student who maintains the same level on this scale has made “a year’s worth of progress.” That assumption is also unwarranted, because standards are set separately by grade, and there is no reason to believe that a given standard, say, Level 3, means a comparable level of performance in adjacent grades. (There is in fact some evidence to the contrary.)
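The equidistance problem can be sketched the same way. This sketch assumes a hypothetical underlying scale that really is an interval scale, with invented cut scores that make the levels unequally wide, and a hypothetical rating function, `level_rating`, that places every level boundary exactly one point apart, in the spirit of the conversion described above (NYC’s actual conversion is not reproduced here). Two students who gain the same 50 underlying points then earn different credit.

```python
from bisect import bisect_right

# Hypothetical underlying scale, ASSUMED to be a true interval scale.
# Cut scores (where Levels 2, 3 and 4 begin) are invented and deliberately
# unequally spaced; they are not New York's actual cut scores.
FLOOR, CEILING = 500, 850
CUTS = [600, 650, 750]
EDGES = [FLOOR] + CUTS + [CEILING]

def level_rating(score):
    """Map an underlying score to a rating in which every level spans 1.0.

    Level boundaries land on 1.0, 2.0, 3.0, 4.0 (and 5.0 at the ceiling)
    however far apart the cut scores are: the equidistance assumption.
    """
    score = min(max(score, FLOOR), CEILING)                   # clamp to the scale
    i = min(bisect_right(EDGES, score) - 1, len(EDGES) - 2)  # level index 0..3
    lo, hi = EDGES[i], EDGES[i + 1]
    return 1.0 + i + (score - lo) / (hi - lo)

# Two students who each gain the same 50 points on the underlying scale.
gain_a = level_rating(650) - level_rating(600)   # all of the narrow Level 2
gain_b = level_rating(700) - level_rating(650)   # half of the wide Level 3
print(gain_a, gain_b)  # 1.0 0.5
```

Student A’s gain covers all of a narrow level and Student B’s covers half of a wide one, so identical real progress earns double the credit for A. Changing the invented level widths changes who benefits, which is why equally effective schools serving different students need not receive similar grades.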

The result is that there is no reason at all to trust that two equally effective schools, one serving higher-achieving students than the other, will get similar Progress Report grades. Moreover, even within a school, two students who are in fact making identical progress may seem quite different by the city’s measure. There may be reasons for policymakers to give more credit for progress with some students than for progress with others, but if they do that, they no longer have a straightforward, comparable measure of student progress.

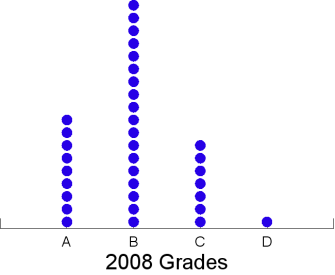

And finally, there is the problem of error. People working on value-added models have warned for years that the results from a single year are highly error-prone, particularly for small groups. That seems to be exactly what the NYC results show: far more instability from one year to the next than could credibly reflect true changes in performance. Mayor Bloomberg was quoted in the New York Times on September 17 as saying, “Not a single school failed again. That’s exactly the reason to have grades…It’s working.” This optimistic interpretation does not seem warranted to me. The accompanying graph shows the 2008 letter grades of all schools that received a grade of F in 2007. It strains credulity to believe that if these schools were really “failing” last year, three-fourths of them improved so markedly in a mere 12 months that they deserve grades of A or B. (The proportion of 2007 A schools that remained As was much higher, about 57 percent, but that was partly because grades overall increased sharply.) This instability is sampling error and measurement error at work. It does not make sense for parents to choose schools, or for policymakers to praise or berate schools, on the basis of a rating that is so strongly influenced by error.
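A small simulation shows the mechanism behind this kind of churn. Everything in it is a hypothetical assumption rather than a model of the real Progress Reports: each school’s true quality is held fixed across both years, each year’s observed score adds noise that shrinks with the number of students tested, and grades are assigned on a fixed curve (bottom 10 percent F), which is simpler than NYC’s actual grading. Because nothing real changes between years, any F school that gets an A or B in year 2 does so purely through error.

```python
import random

# Illustrative simulation, NOT a model of the actual Progress Reports.
# Assumptions (all hypothetical): true quality is fixed across both years, and
# each year's observed score adds noise whose spread shrinks with the number
# of tested students (sampling and measurement error lumped together).

def observed_score(true_quality, n_students, noise_sd, rng):
    """One year's observed school score: true quality plus size-dependent noise."""
    return true_quality + rng.gauss(0.0, noise_sd / n_students ** 0.5)

def letter_grades(scores):
    """Grade on a fixed curve (hypothetical): bottom 10% F, then D, C, B, A."""
    bands = [(0.10, "F"), (0.25, "D"), (0.60, "C"), (0.85, "B"), (1.00, "A")]
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    grades = [""] * len(scores)
    for rank, i in enumerate(order):
        pct = (rank + 1) / len(scores)
        grades[i] = next(g for cutoff, g in bands if pct <= cutoff)
    return grades

def share_of_f_schools_graded_a_or_b(n_schools=1000, noise_sd=1.0, seed=0):
    """Fraction of year-1 F schools that get an A or B in year 2."""
    rng = random.Random(seed)
    quality = [rng.gauss(0.0, 0.1) for _ in range(n_schools)]  # never changes
    sizes = [rng.randint(20, 200) for _ in range(n_schools)]   # students tested

    def one_year():
        return letter_grades(
            [observed_score(q, n, noise_sd, rng) for q, n in zip(quality, sizes)]
        )

    year1, year2 = one_year(), one_year()
    failed = [i for i, g in enumerate(year1) if g == "F"]
    return sum(year2[i] in ("A", "B") for i in failed) / len(failed)

print(share_of_f_schools_graded_a_or_b(noise_sd=0.0))  # 0.0: no error, no churn
print(share_of_f_schools_graded_a_or_b(noise_sd=1.0))
```

With `noise_sd=0.0` the grades repeat exactly. With noise, some year-1 F schools jump to A or B even though their quality never changed, because the schools graded F were disproportionately those with unlucky draws, and that bad luck does not repeat. The sketch says nothing about how large the error component is in New York’s data; it only shows that error alone can produce instability of this kind.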

We should give NYC its due. The Progress Reports are commendable in two respects: considering non-test measures of school climate, and trying to focus on growth. Unfortunately, the former get very little weight, and the growth measures are not yet ready for prime time.

2008 Letter Grades of Schools that Received an F Grade in 2007