An intriguing document surfaced the other day, and it raises questions about how all the testing companies that are jumping into the common-core market will handle the student-level data they collect.

It’s an overview of testing options developed for the Michigan state legislature. State lawmakers there requested this report as part of their soul-searching about how to proceed on the common core. In the end, the Dec. 1 report suggests that Michigan’s original idea—to go with the Smarter Balanced Assessment Consortium’s system—is the best option. But in doing so, it offers interesting tidbits of information—and points to open questions—about the practices of the expanding field of competitors.

For starters, call up the report. Go to the “scoring and reporting” section on page 14. You’ll see a column comparing 12 testing companies’ representations about whether the Michigan Department of Education and its schools will receive all the data underlying the companies’ summative assessment reports, so that the department and schools can do further analysis if they wish.

The study group apparently found that the two federally funded testing consortia—Smarter Balanced and PARCC—and CTB/McGraw-Hill were the only ones that said they’d give the state back its data in this way. Four companies—College Board, ACT, Curriculum Associates and Houghton Mifflin Harcourt/Riverside—didn’t offer the state sufficient reassurance on this. Five didn’t respond.

This gets at a hot-button issue right now: Who gets to see student-level data collected as a part of states’ testing regimens?

In the “transparency and governance” section on page 8, the report sums up the extent to which it obtained “clear evidence [that] the state of Michigan retains sole and exclusive ownership of all student data” under each of the providers’ testing systems. Of the 12 providers, only five—Smarter Balanced, PARCC, CTB/McGraw-Hill, Measured Progress, and Houghton Mifflin Harcourt/Riverside—met that mark. The rest didn’t, or didn’t respond.

ACT and the College Board

One of the providers that failed to produce sufficient evidence of that, according to the Michigan report, is ACT Inc., which has been aggressively marketing its Aspire testing system as an alternative to the tests by the two state consortia (and recently hired former Indiana and Florida schools chief Tony Bennett to help it do that). Another provider that didn’t calm Michigan’s jitters on this question was the College Board, which disclosed recently that it was getting into the common-core testing market at the middle and high school levels. The College Board, too, has been building its stable of heavy hitters, most recently nabbing top federal education department statistician Jack Buckley.

The Michigan feedback from testing providers flows directly into the ongoing dialogue about how student data will be handled. We reported to you recently that PARCC approved a new policy that lays out who controls such data. And we’ve disclosed some of the clarifications that the U.S. Department of Education has been making about its place in the data pipeline. Smarter Balanced has approved a brief statement of principle on data, and will be working out separate agreements with its 25 member states so each can be customized to take state regulations and laws into account.

But while all that is happening, the Michigan report offers a unique glimpse into a quickly evolving—and intensely competitive—testing marketplace for the Common Core State Standards. It offers more information about these systems—and the offerings of other providers—than has been widely available before.

The report allows an early comparison of the testing products being offered by a dozen organizations. What are their cost estimates? What types of items will they have, and will they include performance tasks? Which ones will offer paper-and-pencil versions, or interim tests to gauge how students are doing during the year? Which will produce results sooner than Michigan currently gets them?

Testmakers’ Offerings

If you yearn for more detail, you’ll doubtless be overjoyed to find vast amounts of additional information in Appendix B of the report. This 206-page document contains each company’s responses to the 75 questions Michigan posed in its request for information. Make yourself a cup of tea (or whatever) and dive in.

Little by little, profiles begin to emerge of the common-core-assessment systems being offered by private testing companies. Take a look at ACT Aspire’s response, for instance (pages 2-23). You can see that it is offering a computer-based test in grades 3-10, with a paper-and-pencil version also available. The summative test costs $22 per student, or $6 more for the paper version. Interim tests will cost another $7 per student.

The test will offer multiple-choice, short-answer, and essay items, with some use of technology features such as drag-and-drop. It will not be computer adaptive, nor will it offer the lengthier, in-depth performance tasks or formative assessment resources for teachers. No true-false items are planned on the summative test, but its interim tests will be exclusively in that format. Some items on its summative tests can be machine-scored, while others will have to be scored by hand. ACT Aspire anticipates four or five “extended” hand-scored items in math at grades 3-8 and 10, and one at grades 3-10 in English/language arts.

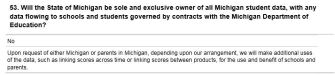

The ownership-of-data issue arises in question 53 of ACT’s response.

ACT said that aggregated student results would be available for download through its web portal, though it couldn’t yet specify how quickly the results would be available after test administration.

With these kinds of answers on public record for 12 test providers, a little less is unknown about the testing landscape ahead.