This week we are taking a break from our regularly scheduled programming to reflect on an important question with increasing relevance to research-practice partnerships (RPPs): the matter of effectiveness. In today’s post, Michelle Nayfack (@mnayfack), Associate Director of Research-Practice Partnerships at California Education Partners (@caedpartners), who leads the Stanford-Sequoia K-12 Research Collaborative, and Laura Wentworth (@laurawent), Director of Research-Practice Partnerships at California Education Partners, who leads the Stanford University-San Francisco Unified School District Partnership (@StanfordSFUSD), reflect on the need for specific measures of RPP effectiveness.

Today’s post offers a real-world perspective, from two colleagues working in RPPs, on Monday’s post: Measuring the Impact of Research-Practice Partnerships in Education.

Research-practice partnerships (RPPs) in education are growing in number and scale in the United States. With this enthusiasm comes the responsibility to demonstrate partnership effectiveness and impact (for example, the current IES director’s call for more research on the impacts of these partnerships).

As the field responds to this call for evidence, we think there is one premise researchers and practitioners measuring RPPs should keep in mind — frameworks or constructs guiding the measurement of partnership effectiveness should be specific enough to define not only the partnership’s key attributes leading to impact, but also how those attributes interact to produce the desired results.

Currently, the frameworks guiding the measurement of these partnerships are quite broad. Aside from Coburn, Penuel, and Geil’s seminal white paper on research-practice partnerships in education, there are a few other frameworks which could also be useful when thinking about the measurement of research-practice partnership effectiveness: Coburn and Farrell’s concept of absorptive capacity, Farley-Ripple and colleagues’ emphasis on conditions supporting research use, or Russell and colleagues’ study of Networked Improvement Communities. Additionally, there are RPPs working to internally study and document their own effectiveness and impacts (for example, Barton and colleagues’ brief, Nayfack and colleagues’ report, or Henrick, Klafehn and Cobb’s book chapter).

Henrick and colleagues come the closest to outlining the specific set of constructs for assessing the effectiveness of research-practice partnerships. Their white paper outlines a set of five dimensions to guide RPPs in their efforts to understand how well they are working. While a helpful first step towards measurement, the framework provides little explanation of how the dimensions interact with each other, making it difficult to know which RPP strategies should be prioritized over time. For example, even across different RPP designs, contexts, and types, we believe partnerships will most likely build trust and cultivate relationships (one of the Henrick et al. dimensions) before they support the practice partner organization in achieving its goals (another dimension).

We advocate for a set of measures similar to the Henrick dimensions, along with measures that capture some agreed-upon, common partnership outcomes or dimensions across a research-practice partnership logic model or theory of action. These would include inputs and activities (actors and strategies) and expected partnership outputs (intermediary outputs like evidence-based decision-making, or longer-term outputs like changes in practice, policy, or student outcomes).

Our argument for more defined, specific measures of RPPs is premised on three principles.

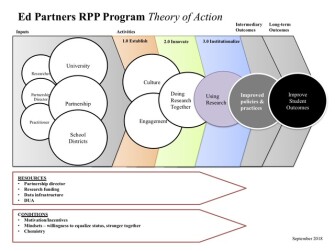

First, it is possible for the field to agree upon a set of RPP dimensions, as well as an understanding of how those dimensions relate to each other, while still having variation in RPP designs, types, and contexts. For example, we started the partnership between Stanford University Graduate School of Education (GSE) and San Francisco Unified School District using the theory of action depicted in Figure 1. This theoretical model describes a set of elements in an RPP which we think interact in certain ways to produce a desired result. From left to right, these are inputs (researchers, practitioners, and intermediaries), activities (doing research together, engagement, and culture), an intermediary output (using research), and two longer-term outputs/outcomes (changes in policy and practice, and improvements to student outcomes), all buoyed by certain conditions and resources. We used this theory of action to design and launch another partnership, the Stanford-Sequoia K-12 Research Collaborative, where Stanford GSE faculty partner with nine school districts that neighbor the Stanford campus, and to advise other universities and school districts in California as they start and sustain their RPPs. While we do not think there is an exact recipe for RPPs, our experience of using one RPP theory of action across multiple contexts gives us confidence that the field could more clearly define not just what the dimensions contributing to effectiveness are, but how these dimensions relate to each other when enacted across RPPs.

Figure 1

Second, when RPPs across the nation have a common set of measures, it will help them grow their collaborations and opportunities to learn from each other. When the leaders of RPPs gather at the NNERPP Annual Forum every summer, we use common frameworks and language to discuss our practice and examine our work. This practice of learning from each other by sharing and comparing our partnerships started early on before NNERPP when Vivian Tseng at the William T. Grant Foundation gathered a working group of RPPs to learn from each other (the effort produced this RPP micro-site with an incredible amount of RPP resources). Thanks to this working group, the Stanford-SFUSD Partnership had the opportunity to partner with Education Northwest and the Baltimore Education Research Consortium to co-design a survey to examine the effectiveness of our RPPs. We wanted to have common measures to look across our partnerships and have common data to help analyze our strengths and challenges together. These discussions led to the development and testing of an internal survey, and some of the items of this survey are still in use today. (See Wentworth, Mazzeo, and Connolly, 2017 for a more thorough description of this work.) As the RPP field advances, we hope the specificity of the measures can allow for greater cross-RPP learning.

Third, the measures of effectiveness will influence the development, design, and impact of the next generation of research-practice partnerships in the education sector. You may have heard the phrase, “what gets measured, gets done.” If we want the next generation of RPPs to be effective and impactful, we need to put a stake in the ground about not just what the RPP dimensions of effectiveness are, but how those dimensions relate to each other across RPPs. For example, IES has funded multiple RPPs through its grant-making program, and there is now emerging evidence that these partnerships supported education leaders’ use of research in their decision-making. The field could take the opportunity to learn how the dimensions of these IES-funded RPPs work together to support the effectiveness of their partnerships. If IES were to support the development of these RPP measures in collaboration with this cohort of IES-funded RPP grantees, the agency and other foundations could use the findings both to guide the criteria of their next call for proposals for RPP projects and to fund more precise, efficacious studies of the next generation of RPPs.

While there is emerging research on RPP effectiveness, there is more work our field can do to measure it. We hope that with the next generation of RPP measures, we can respond to the call for demonstrating RPP effectiveness and impact, and determine which dimensions make RPPs effective as well as how those dimensions interact to make improvements in education.

Photo: Unsplash

Figure 1: California Education Partners